- Multi-agent model with extended deliberation for complex queries

- Benchmark improvements: 34.8% on HLE and 87.6% on LiveCodeBench 6

- Access for AI Ultra plan subscribers at $250/month

- Slower but higher-quality responses, with daily usage limits

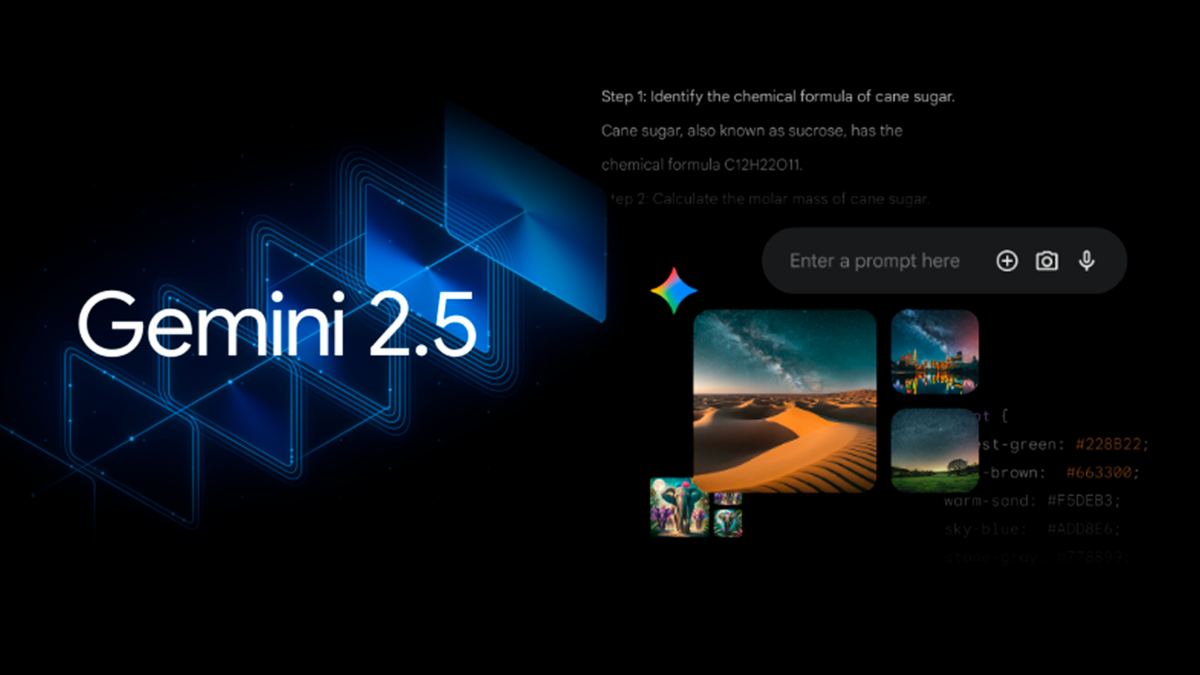

Google has begun rolling out Gemini 2.5 Deep Think in its Gemini app, a reasoning-focused iteration that the company describes as its most advanced system to date. The model is designed for particularly complex questions, where it needs to spend several minutes analyzing options before proposing a solution.

Access is restricted to subscribers of the AI Ultra plan ($250/month), a decision linked to the high computational cost of running the model. It is not a standalone experiment: it is integrated into the Gemini ecosystem and is activated when the task requires deeper reflection.

What is Deep Think and how does it work?

Deep Think builds on the foundation of Gemini 2.5 Pro but extends both thinking time and parallel analysis. Instead of committing to a single route, it generates several approaches, weighs hypotheses beyond the first option, and readjusts its reasoning before deciding. Google also says it has incorporated new reinforcement learning techniques to optimize these reasoning paths.
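
The "propose several approaches, compare them, then refine" loop described above can be illustrated with a toy sketch. This is not Google's implementation, whose details are not public. The names `deliberate`, `newton_step`, `shrink`, `grow`, and `closeness` are hypothetical, and the "question" is deliberately trivial (estimating a square root) so the loop itself stays visible.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Optional, Sequence

# A strategy takes the question and the best answer so far (or None)
# and proposes a new candidate answer. Hypothetical type for this sketch.
Strategy = Callable[[float, Optional[float]], float]


def deliberate(
    target: float,
    strategies: Sequence[Strategy],
    score: Callable[[float, float], float],
    rounds: int = 8,
) -> float:
    """Run all strategies in parallel, keep the best candidate, repeat."""
    best: Optional[float] = None
    with ThreadPoolExecutor() as pool:
        for _ in range(rounds):
            # Every strategy proposes a candidate, seeded with the current best.
            candidates = list(pool.map(lambda s: s(target, best), strategies))
            if best is not None:
                candidates.append(best)  # never discard the current best
            # Compare hypotheses and keep the highest-scoring one.
            best = max(candidates, key=lambda c: score(target, c))
    return best


# Toy strategies for "find x such that x * x == target".
def newton_step(target: float, hint: Optional[float]) -> float:
    x = target if hint is None else hint
    return 0.5 * (x + target / x)


def shrink(target: float, hint: Optional[float]) -> float:
    x = target if hint is None else hint
    return x * 0.5


def grow(target: float, hint: Optional[float]) -> float:
    x = 1.0 if hint is None else hint
    return x * 1.5


def closeness(target: float, candidate: float) -> float:
    # Higher is better: negative squared-value error.
    return -abs(candidate * candidate - target)
```

Calling `deliberate(49.0, [newton_step, shrink, grow], closeness)` converges toward 7. In the first rounds the crude `grow` strategy wins, and only later does `newton_step` take over, which is the point of keeping several routes alive instead of committing to the first one.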

It does not appear as a separate model in the selector: when Gemini 2.5 Pro is chosen, Deep Think is activated as an integrated tool in flows such as Canvas or Deep Research when the query requires it. In the background, the system can automatically use code execution and Google Search to support its reasoning.

Its approach is clearly multimodal and is geared toward tasks that combine text, images, and other cues. In internal testing, Google highlights improvements in web development, iterative design, scientific reasoning, and step-by-step planning, with longer, more detailed responses when complexity warrants.

Performance, access and limits

In benchmarks, Google's figures place Deep Think ahead of direct competitors: it reaches 34.8% on Humanity's Last Exam (HLE, no tools), compared with 25.4% for Grok 4 and 20.3% for o3, and achieves 87.6% on LiveCodeBench 6, surpassing Grok 4's 79% and o3's 72% in competitive programming.
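
To make the size of those gaps concrete, the short sketch below computes Deep Think's lead in percentage points from the figures Google reports. The `margins` helper is a hypothetical name introduced only for this illustration.

```python
# Scores as reported by Google, in percent.
scores = {
    "HLE (no tools)": {"Gemini 2.5 Deep Think": 34.8, "Grok 4": 25.4, "o3": 20.3},
    "LiveCodeBench 6": {"Gemini 2.5 Deep Think": 87.6, "Grok 4": 79.0, "o3": 72.0},
}


def margins(table: dict, leader: str) -> dict:
    """Return the leader's advantage over each rival, in percentage points."""
    result = {}
    for benchmark, row in table.items():
        result[benchmark] = {
            rival: round(row[leader] - value, 1)
            for rival, value in row.items()
            if rival != leader
        }
    return result


for benchmark, gaps in margins(scores, "Gemini 2.5 Deep Think").items():
    print(benchmark, gaps)
```

On these numbers, the lead over Grok 4 is 9.4 points on HLE and 8.6 points on LiveCodeBench 6, and the lead over o3 is 14.5 and 15.6 points respectively.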

Mathematics is one of its strong points. It performs particularly well on AIME, and a variant trained to reason for hours reached gold-medal level at the International Mathematical Olympiad (IMO). The standard version, closer to a consumer product, reaches bronze-medal level on the 2025 IMO, according to the company.

Access is constrained by its execution cost. Each response can take several minutes and consume far more resources than a traditional model. That is why Google offers it in the AI Ultra plan ($250/month), maintains a daily limit on queries (unspecified and subject to change), and is beginning to open it up in a controlled manner in the app and to a group of developers via API.

The deployment also reopens the debate on the accessibility of cutting-edge AI. Several companies are exploring multi-agent systems for the quality of their results, but these systems are more expensive to run, which limits their availability. Google maintains that this phased deployment ensures quality and gathers real-world feedback without overloading its infrastructure.

Deep Think bets on thinking more and better at the expense of time and resources: a multi-agent design, extended deliberation, and automated tool use to raise the bar on difficult questions, with solid benchmark results and access limited to the premium tier, subject to quotas.